It’s becoming clear to insurers that AI isn’t optional. It’s already embedded in everything from spam filters to customer support chatbots to risk models.

But one concern I keep hearing, and rightly so, is about operationalizing AI. Specifically, how can you trust that it's accurate? How do you know it isn't biased?

This is where a lot of organizations hit a wall. The tech might look impressive, but when it comes to real-world use, the questions start piling up. AI governance teams often struggle to determine whether what they're being sold is actually fair, reliable, or even usable at scale.

These concerns are real. And they’re exactly why I believe every AI vendor should be required to go through independent, third-party validation.

Here’s why that matters:

AI is moving fast, but implementing real, sustainable solutions isn’t just about speed. It’s about building responsibly.

If a vendor isn't willing to let an independent party kick the tires and see what actually works, there's a good chance they're riding the AI hype rather than offering a real solution.

You test AI in insurance the same way you’d evaluate any serious tool: you define what matters, and then you stress test it under real-world conditions.

DigitalOwl recently worked with David Schraub, F.S.A., M.A.A.A., C.E.R.A., a leading actuary and AI regulatory expert, to perform an independent assessment of our platform.

Here’s how he tested for bias:

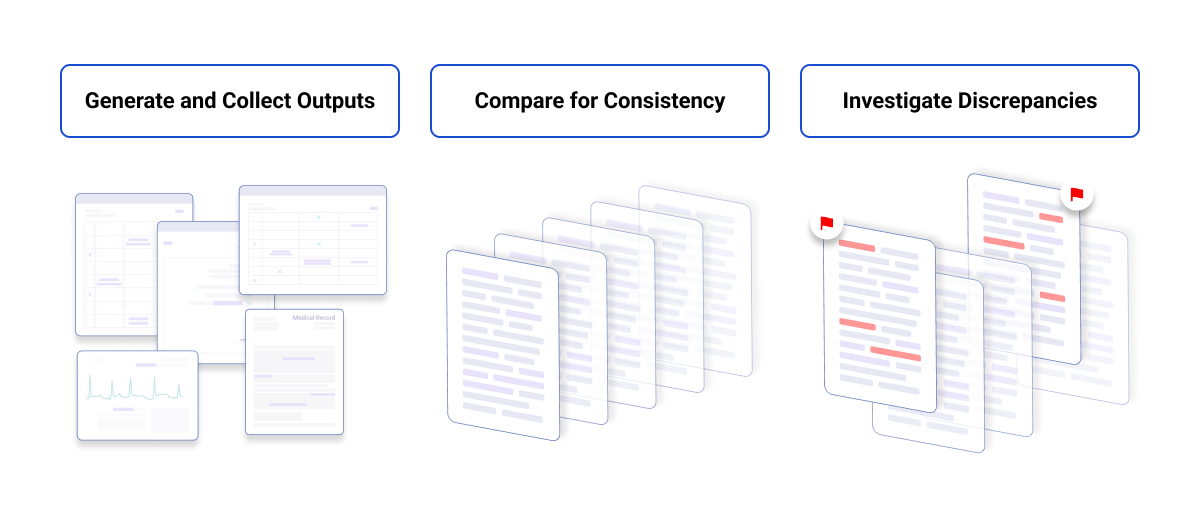

To evaluate accuracy and consistency, he also performed the following:

Different types of AI vendors will require different types of testing. But for an AI medical record review platform, this kind of evaluation is critical. It’s the only way to ensure the system performs accurately, without bias or discrimination, in real-world scenarios.

To see the results of DigitalOwl’s third-party evaluation and to learn more about what goes into a rigorous, independent review of an AI solution, download our Bias and Accuracy Guide.